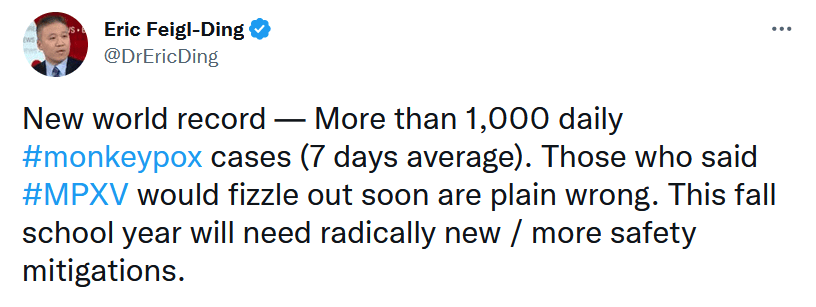

Oh, the halcyon days of three months ago. I was younger, the air was warmer, and Twitter was aflutter with hysterical reports on how Monkeypox was rampaging through the population and would run wild through schools as soon as the fall semester began.

Today Monkeypox is in the rear-view mirror. The vaccine rollout was stymied by some impressively bad government bureaucracy, but the vaccines worked, the virus was only as contagious as the experts said, and spread almost solely through the populations the experts said it would. It was never airborne, and it never ran rampant the way doomers thought (hoped) it would.

Speaking of the distant past, remember Credit Suisse?

A few weeks ago, Credit Suisse was supposed to have a “Lehman Brothers” moment: their debt was so extraordinary that they were destined to collapse, taking the global banking system down with them. They’re up 20% in the last month and show no signs of default.

Remember Shanna Swan? She’s made headlines claiming that the human race could go extinct due to chemicals in our environment destroying male fertility. Personally, I knew this claim was bunk from the moment I read it because, biologically speaking, human reproduction is basically identical to all mammalian reproduction. If human fertility really was plummeting due to the chemicals in our environment, then other mammals (the cows we ranch, the dogs and cats we live with, even the rats that infest our subways) should have also seen plummeting male fertility due to their bad luck of sharing the planet with us. Yet somehow no overall drop in mammalian fertility was recorded; this catastrophe only affected humans. No ranchers complained of an inability to fertilize their cows, and no reduction in stray dogs and cats was reported due to a drop in male fertility. This was somehow the one biological process that affected humans and no other mammals. It turns out there was a good reason for that: her whole doom prediction was junk and rested entirely on flawed assumptions.

I’ve grown pretty tired of the endless predictions of collapse doled out by social media. Every week it seems there’s another new thing that will destroy us all, but when life carries on as normal, none of the prediction-mongers ever admit they were wrong. There are more than enough actual bad things out there without social media taking misunderstood factoids and extrapolating the complete worst-case scenario out of them. I’d like to have some more accountability for prediction-mongers, but social media makes that impossible: by the time some coming catastrophe can be conclusively proven false, a new one has been conjured up in its stead. Repeat ad nauseam, giving constant predictions of collapse and using any downturn of any kind as evidence for your accuracy. It’s just tiring.